Artificial intelligence (AI) is reshaping content creation by improving efficiency and opening new ways to generate material. It also brings compliance challenges that content creators and compliance officers must address. AI compliance means adhering to the laws and regulations that govern AI use, here in the context of content generation. For global brands, it is essential to align practices with the relevant standards while keeping AI systems transparent and accountable.

The regulatory landscape is rapidly developing, with frameworks like the EU AI Act and various U.S. initiatives setting forth guidelines for appropriate use of AI. Understanding these frameworks is paramount for maintaining compliance and minimizing legal risks. As organizations increasingly adopt AI technologies, they must also consider ethical implications, including issues related to bias prevention and data privacy, which significantly impact the trustworthiness of AI-generated content.

This article explores the complexities of AI compliance in content creation by examining regulatory requirements, ethical considerations, best practices for implementation, and tools designed to facilitate compliance efforts.

Understanding AI Compliance in Content Creation

AI compliance in content creation encompasses the regulations and ethical standards governing the utilization of AI tools for generating, managing, and distributing content. The significance of compliance is underscored by the need for transparency, particularly given the immense volume of content created through AI technologies.

A key regulatory framework influencing compliance is the EU AI Act, regarded as the first comprehensive legal framework for AI. The Act entered into force on August 1, 2024, and most of its provisions apply from August 2, 2026, with some obligations phased in on other dates. Its transparency rules require that certain AI-generated or manipulated content, such as deepfakes, be identifiable and appropriately labeled. In the United States, President Biden's 2023 executive order on AI directed federal agencies to develop guidance on authenticating and labeling AI-generated content, with the aim of giving audiences clear information about the origin of generated materials. That order was rescinded in January 2025, so organizations should not treat it as a current source of obligations.
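To make the labeling idea above concrete, here is a minimal sketch of how a publishing pipeline might attach both a visible notice and a machine-readable disclosure to a piece of generated text. Everything in it is an assumption for illustration: the `AIDisclosure` fields, the `label_content` helper, and the notice wording are hypothetical and are not taken from the EU AI Act or any other regulation.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AIDisclosure:
    """Hypothetical disclosure record; field names are illustrative, not a legal schema."""
    ai_generated: bool
    generator: str        # name of the tool or model that produced the text
    human_reviewed: bool
    created_at: str       # ISO 8601 timestamp in UTC


# Assumed notice wording; real wording should come from legal review.
NOTICE = "This content was generated with the assistance of AI."


def label_content(text: str, generator: str, human_reviewed: bool = False,
                  now: Optional[datetime] = None) -> dict:
    """Return the text with a visible notice plus a machine-readable disclosure."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    disclosure = AIDisclosure(True, generator, human_reviewed, timestamp)
    return {
        "body": f"{text}\n\n{NOTICE}",
        "disclosure": asdict(disclosure),
    }
```

Calling `label_content("Quarterly summary", "example-model")` returns a dictionary whose `body` ends with the notice and whose `disclosure` can be stored alongside the published item. Keeping the visible notice and the structured record together is one way to serve both readers and auditors from the same step.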

Understanding AI compliance means recognizing the implications of these evolving regulations, which guide content creators toward practices that meet legal standards and foster consumer trust.

Infographic summarizing the key features of the EU AI Act (Source: Keepabl)

Navigating Global Regulations for Content Creators

Because regulatory frameworks vary across jurisdictions, content creators need to be adaptable. For example, the EU AI Act requires that generative AI content comply with specific labeling and transparency standards, which are essential for accountability in the European market.

In the United States, regulatory efforts have focused on establishing AI labeling initiatives. Notably, the California AI Transparency Act mandates that AI providers with over one million monthly users implement detection tools for AI-generated content by January 1, 2026. This combination of federal and state-level regulations creates a multifaceted compliance landscape.

Content creators need to stay informed about these regulations and how they apply to their operations. Non-compliance can carry significant legal consequences, so organizations should address regulatory requirements proactively through structured compliance processes.

Flowchart illustrating AI compliance monitoring workflow (Source: ResearchGate)


Ethical Considerations in AI-Generated Content

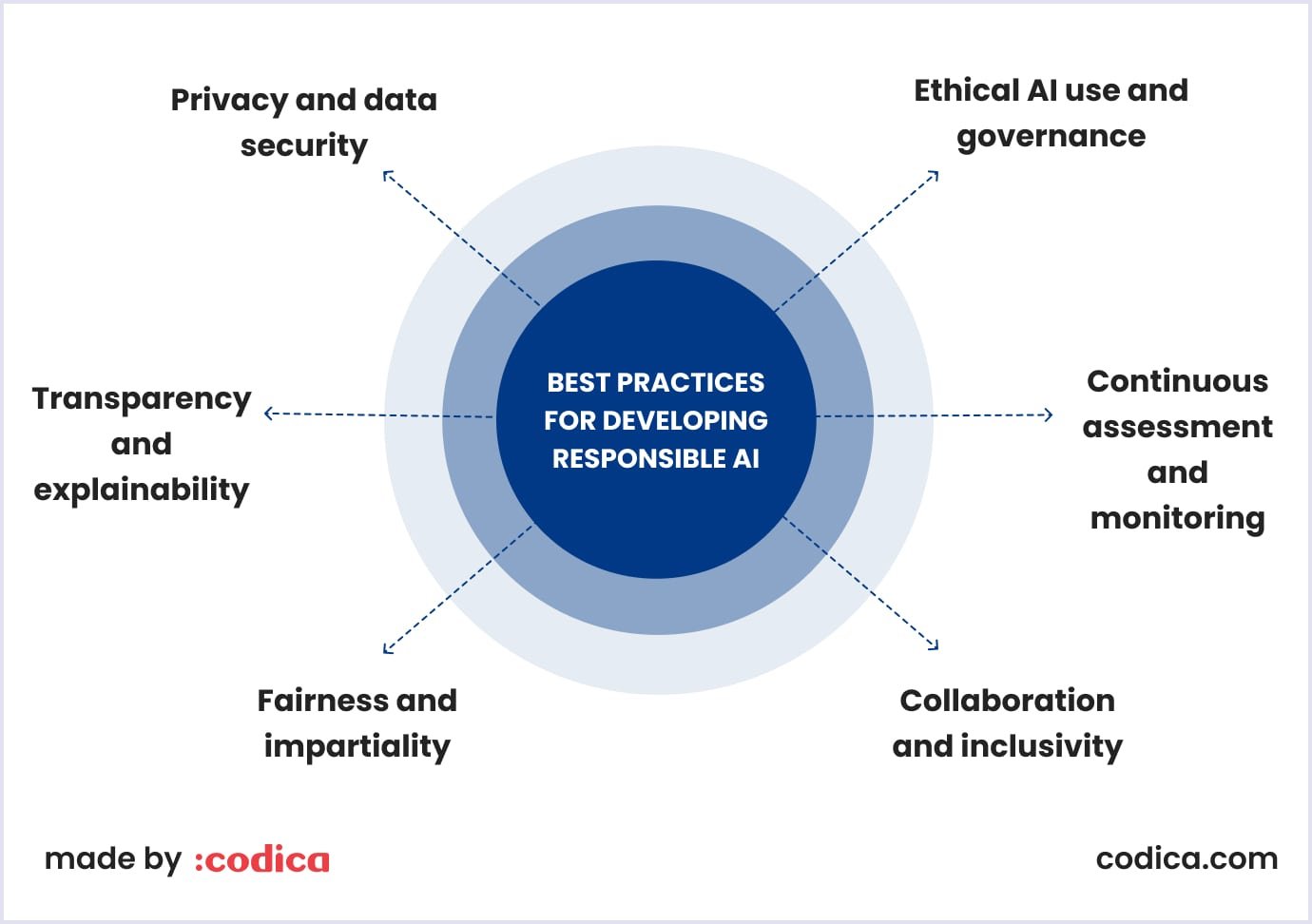

AI compliance goes beyond legal requirements to the ethical considerations that underpin trust in AI-generated content. Bias prevention, transparency, and accountability are essential to the responsible use of AI technologies.

Bias prevention is a prominent concern, as AI models trained on non-diverse datasets may inadvertently perpetuate existing biases. Regular audits are advised to detect and correct these biases in AI-generated content. Transparency measures, such as disclosing the use of AI tools and being clear about data sourcing, also help build public trust.

Establishing accountability mechanisms is equally important, as identifying responsibility in the case of errors or misrepresentation becomes increasingly critical. Content creators must ensure that human oversight is integrated into the AI content generation process, particularly in sectors where precision and reliability are paramount, such as journalism and healthcare.

Organizations that adhere to ethical standards are generally better placed to earn public trust and to meet legal requirements.

Table summarizing best practices for ethical use of AI (Source: Codica)


Best Practices for Implementing AI Tools Responsibly

Successfully navigating the complexities of AI compliance requires organizations to adopt effective best practices when integrating AI tools into content workflows. Establishing a comprehensive governance framework is a pivotal step, outlining ethical guidelines, compliance measures, and monitoring mechanisms for AI applications.

Conducting thorough data audits and preparing clean datasets are essential for ensuring the quality and integrity of AI training data. Organizations should implement robust methods for data collection and management to prevent biases from affecting AI-generated content.

Regular reviews of AI-generated outcomes enhance quality and help organizations maintain adherence to evolving regulations. Furthermore, human oversight must be incorporated into the AI content generation process, ensuring critical content receives appropriate scrutiny before publication.
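The human oversight step described above can be sketched as a simple publication gate. This is an illustrative model only: the `ContentItem` type, the `approve` and `can_publish` helpers, and the rule that sensitive content needs two distinct reviewers are all assumptions, not requirements drawn from any regulation.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    DRAFT = "draft"
    APPROVED = "approved"


@dataclass
class ContentItem:
    title: str
    body: str
    sensitive: bool = False      # assumed flag, e.g. for health or news content
    status: Status = Status.DRAFT
    reviewers: List[str] = field(default_factory=list)


def approve(item: ContentItem, reviewer: str) -> None:
    """Record a human reviewer's sign-off on the item."""
    item.reviewers.append(reviewer)
    item.status = Status.APPROVED


def can_publish(item: ContentItem) -> bool:
    """Allow publication only after enough distinct human approvals.

    Assumed policy: one approval normally, two for sensitive content.
    """
    required = 2 if item.sensitive else 1
    distinct_reviewers = len(set(item.reviewers))
    return item.status is Status.APPROVED and distinct_reviewers >= required
```

Counting distinct reviewers rather than raw approvals means the same person signing off twice does not satisfy the stricter rule for sensitive content.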

Organizations that prioritize these best practices can reduce legal risk and also get more value from their AI tools.

Checklist highlighting best practices for implementing AI tools (Source: Punktum)


Leveraging AI Tools for Compliance in Content Creation

Emerging AI compliance tools present innovative solutions for organizations aiming to maintain adherence to content creation standards. Compliance automation software can streamline routine tasks, enhancing accuracy and efficiency in audits and document reviews.

Increasingly, organizations are adopting AI governance tools that provide checks for regulatory adherence, bias detection, and impact assessments of AI-generated content. Additionally, automated content scanning tools facilitate the review of large volumes of generated content for compliance issues, significantly reducing the time and labor involved compared to manual reviews.
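As a rough illustration of automated content scanning, the sketch below checks a piece of text against a few regular-expression rules and reports what it finds. The rule names, the patterns, and the required disclosure phrase are hypothetical examples chosen for this sketch; a real rule set would come from legal and compliance teams, and commercial tools are likely to use far richer checks.

```python
import re
from typing import List, NamedTuple


class Finding(NamedTuple):
    rule: str
    excerpt: str


# Assumed example rules: phrases that may need review before publication.
FLAGGED_PATTERNS = {
    "absolute_claim": re.compile(r"\b(guaranteed|risk-free|100% safe)\b", re.IGNORECASE),
    "email_address": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
}

# Assumed disclosure wording that every AI-generated item should contain.
REQUIRED_DISCLOSURE = re.compile(r"generated with (the assistance of )?AI", re.IGNORECASE)


def scan(text: str) -> List[Finding]:
    """Return one finding per flagged match, plus one if the disclosure is missing."""
    findings = [
        Finding(rule, match.group(0))
        for rule, pattern in FLAGGED_PATTERNS.items()
        for match in pattern.finditer(text)
    ]
    if not REQUIRED_DISCLOSURE.search(text):
        findings.append(Finding("missing_ai_disclosure", ""))
    return findings
```

An empty result means none of the example rules fired. It does not mean the content is compliant, so a scanner like this works best as a filter that routes items to human reviewers.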

Organizations using these automated tools may see lower compliance costs and shorter content production timelines. By integrating AI technologies into their compliance initiatives, content creators can improve operational efficiency while adhering to legal standards.

Comparison chart of various generative AI tools for compliance (Source: Datamatics)


Addressing Challenges in AI Compliance: Data Privacy and Regulatory Changes

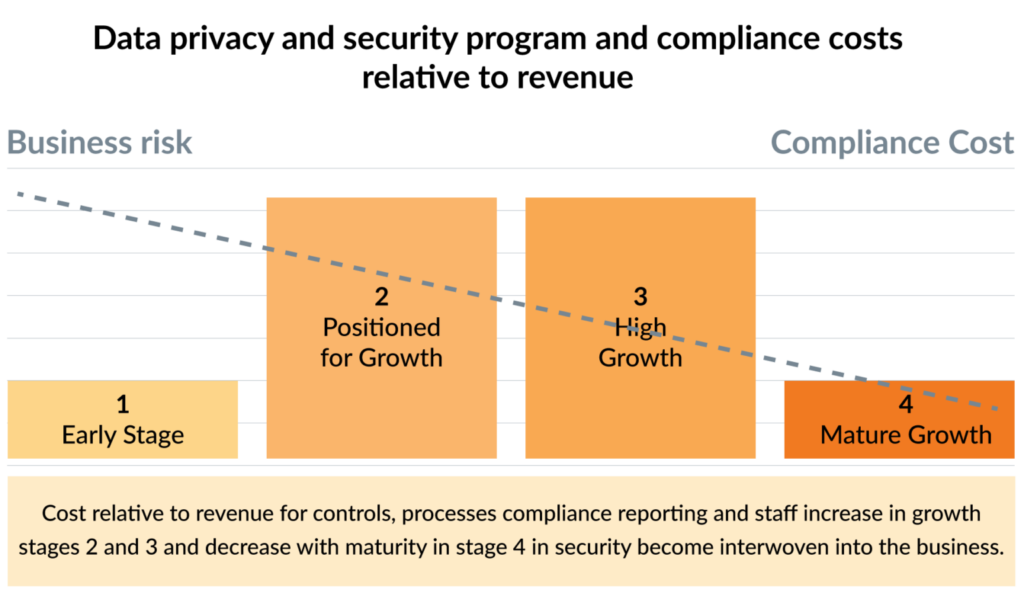

Organizations today face considerable challenges related to data privacy, technological constraints, and evolving regulations. For instance, AI systems that handle patient data in the U.S. healthcare sector must comply with laws like HIPAA. Many healthcare organizations using AI are reportedly still establishing proper governance frameworks.

In the financial sector, adherence to stringent data privacy laws such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) is critical. Institutions using AI for fraud detection must ensure that customer data remains protected. Implementing privacy-preserving AI technologies can also increase compliance costs.

Technological limitations further complicate compliance efforts, as AI systems may struggle to grasp nuance and context, which can lead to compliance failures. Organizations must remain adaptable as new regulations emerge, particularly those regarding AI-generated content.

Organizations that develop compliance mechanisms proactively can reduce their legal exposure, which makes ongoing awareness of regulatory developments important.

Graph displaying changing compliance costs related to data privacy over time (Source: Aprio)


A Future-Proof Approach to AI Compliance in Content Creation

A future-proof approach to AI compliance rests on long-term strategies centered on documentation, collaboration, and regulatory awareness. Systematic audit trails document compliance and provide a reliable record in the event of external scrutiny.
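One way to make an audit trail tamper-evident is to chain entries together with hashes, so that altering an earlier record invalidates everything after it. The sketch below illustrates that idea under stated assumptions: the `AuditTrail` class and its entry fields are hypothetical, and timestamps and persistent storage are left out to keep the example short.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    """Hash an entry's fields in a stable key order."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditTrail:
    """In-memory, hash-chained log of compliance-relevant actions."""

    def __init__(self) -> None:
        self.entries = []

    def record(self, actor: str, action: str, content_id: str) -> dict:
        """Append an entry linked to the hash of the previous one."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "actor": actor,
            "action": action,
            "content_id": content_id,
            "prev_hash": prev_hash,
        }
        entry["hash"] = _digest(entry)  # computed before the hash key is added
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link in the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

A typical use would be to call `record` whenever content is generated, reviewed, or published, and to run `verify` before handing the log to an auditor. A production system would also need timestamps, durable storage, and access controls, which this sketch does not cover.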

Cross-departmental collaboration is essential, as legal, compliance, IT, and content creation teams need to work in tandem to navigate the complexities surrounding AI technologies. This cooperative effort can enhance organizational resilience amid changing regulatory landscapes.

Training employees on AI ethics and compliance is another crucial strategy. By equipping staff with knowledge of the evolving regulatory landscape, organizations can foster an environment of ethical responsibility.

Organizations that prioritize compliance-oriented training often see improvements in operational practices, which can contribute to fewer violations and greater public trust.

Roadmap graphic for developing a sustainable compliance strategy for AI (Source: Auxilio Bits)


In conclusion, AI compliance in content creation requires navigating a multifaceted regulatory environment while integrating ethical considerations and best practices into organizational frameworks. By leveraging emerging tools and adopting proactive strategies, content creators and compliance officers can ensure compliance and enhance public trust in AI-generated content. A commitment to responsible AI usage establishes a foundation for advancements in the field, maintaining transparency and accountability as core principles throughout the process.
