As a content creator or digital marketer, navigating the intricacies of artificial intelligence (AI) can be both exciting and challenging. AI has transformed how we create and engage with content, yet it has also raised important questions about trust and ethics. To build and maintain that trust, transparency must be at the forefront of your AI practices. Transparency encompasses openly communicating how AI is used in content development, which fosters a more accountable relationship with your audience.

In an age where skepticism toward automated content is growing, you need to ensure your audience understands the role of AI in the materials you provide. Research indicates that 78% of educators believe transparency in AI tools is vital for maintaining trust. Furthermore, organizations that adopt clear AI disclosure policies report a 23% increase in user trust scores. By embracing transparency, you not only enhance audience engagement but also adhere to ethical guidelines that are increasingly essential in digital communication.

This guide offers actionable strategies to help you foster trust through transparent AI practices.

Why Transparency in AI is Key to Ethical Content Creation

Transparency in AI content creation means clearly telling your audience how AI contributed to the content they consume. This practice is crucial for building user trust, a key driver of engagement.

User trust affects metrics such as shareability, repeat visits, and loyalty. Ethically, failing to be transparent can lead audiences to perceive your content as deceptive or biased. When users understand how AI shapes the content they consume, they are more likely to trust and engage with it.

In practice, organizations that prioritize transparency often find that their audiences respond positively. Explaining how AI shapes outcomes lets readers make informed decisions about what they consume, which can strengthen relationships over time.

An infographic demonstrating how transparency in AI relates to user trust and engagement metrics. (Source: Hartman Group)

Establishing Clear Disclosure Practices for AI-Generated Content

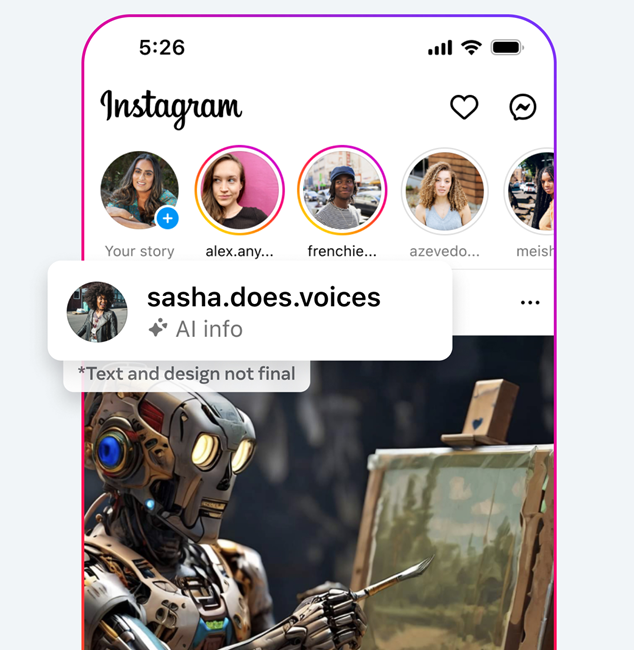

To cultivate trust through transparency, establishing clear disclosure practices is indispensable. Start by labeling your AI-generated content, making sure to include visual cues like tags, watermarks, or icons that signal when AI has been involved. This approach is not only ethical but essential for audience engagement.
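
To make labeling concrete, here is a minimal Python sketch that derives both a visible tag and a machine-readable record from a single disclosure object, so the two can never disagree. The `AIDisclosure` class, its field names, and the label wording are hypothetical choices for illustration. The JSON output is not a watermark and does not follow any particular standard, so adapt it to whatever format your platform or regulator expects.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical label text for each level of AI involvement.
LABELS = {
    "none": "",
    "assisted": "Created with AI assistance",
    "generated": "AI-generated content",
}


@dataclass
class AIDisclosure:
    """Hypothetical disclosure record attached to one piece of content."""
    ai_involvement: str   # one of the keys in LABELS
    tool: str             # free-text name of the AI tool, if any
    human_reviewed: bool  # whether an editor reviewed the AI output

    def __post_init__(self) -> None:
        # Reject values that have no agreed-upon label.
        if self.ai_involvement not in LABELS:
            raise ValueError(f"unknown ai_involvement: {self.ai_involvement!r}")


def visible_label(d: AIDisclosure) -> str:
    """Human-readable tag shown next to the content ("" if no AI was used)."""
    label = LABELS[d.ai_involvement]
    if label and d.human_reviewed:
        label += " (reviewed by our editors)"
    return label


def machine_readable(d: AIDisclosure) -> str:
    """JSON version of the same disclosure, e.g. for page metadata."""
    return json.dumps(asdict(d), sort_keys=True)


post = AIDisclosure(ai_involvement="assisted", tool="example-llm", human_reviewed=True)
print(visible_label(post))     # Created with AI assistance (reviewed by our editors)
print(machine_readable(post))  # {"ai_involvement": "assisted", "human_reviewed": true, "tool": "example-llm"}
```

Generating both outputs from one record is the design choice that matters here: the tag readers see and the metadata machines read stay consistent automatically.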

Familiarize yourself with relevant regulations, such as the European Union's AI Act, which requires transparency through labeling and watermarking. Emerging laws in other jurisdictions, such as California legislation set to take effect on January 1, 2026, further underscore the importance of these practices.

Consider Meta, which labels AI-generated content on platforms such as Instagram and has adjusted those labels in response to user feedback. Initiatives like this show that organizations can adapt their practices to both audience needs and regulatory requirements.

A screenshot showing Meta's labeling of AI-generated content on Instagram platforms. (Source: FoneArena)

Educating Audiences About AI: A Pathway to Trust

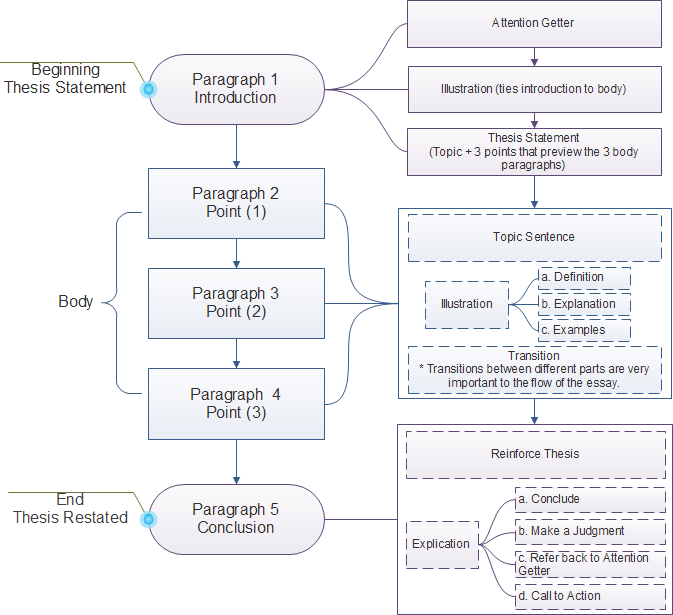

An essential component of fostering trust is educating your audience about AI's capabilities and limitations. You can achieve this by developing resources that clearly explain how AI influences content generation.

Interactive tutorials that allow audiences to engage with AI concepts can also enhance understanding and trust. Regular updates on your AI practices and developments will keep your audience informed and involved.

Research has shown that users who are educated about AI's limitations rate AI-generated content 18% higher in quality compared to those without such knowledge. Moreover, platforms that provide resources about AI have reported a 15% increase in user engagement. To further encourage trust, consider incorporating user feedback mechanisms, allowing your audience to express concerns and ask questions about the AI systems you use.

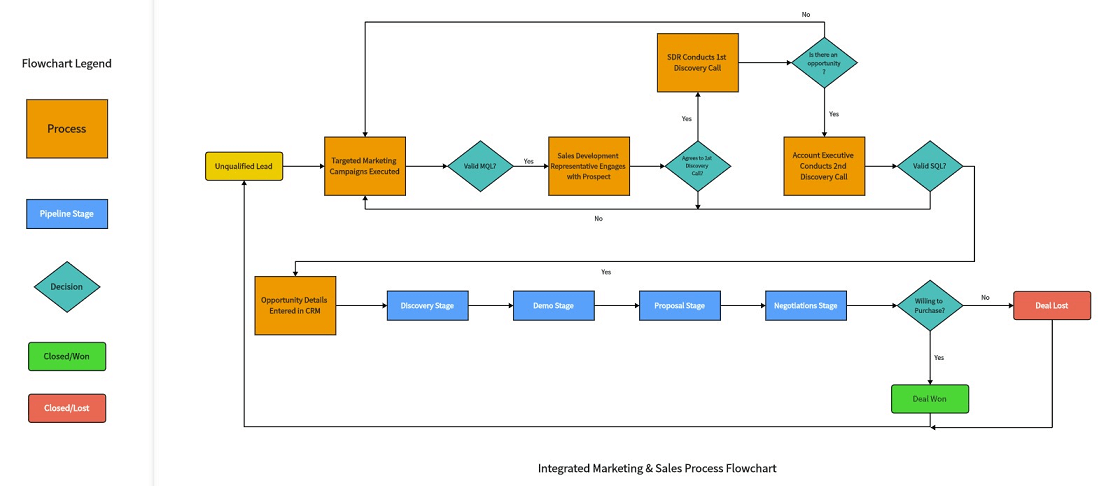

A flowchart that elaborates on different strategies for educating users about AI, enhancing trust. (Source: EdrawSoft)

The Importance of Human Oversight in AI Content Creation

While AI tools can enhance the efficiency of content creation, human oversight is crucial. It’s important to have structured review processes that ensure quality, accuracy, and contextual relevance.

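As you define the roles of AI and human contributors, make accountability a priority. Organizations that implement human review of AI-generated content often see higher trust levels among audiences. Regulation points in the same direction: the California AI Transparency Act focuses mainly on disclosure (requiring large generative AI providers to embed provenance information and offer free AI-detection tools) rather than mandating human review, but it reflects a broader expectation that organizations remain responsible for their AI outputs and the misinformation risks they carry.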

By developing guidelines for oversight, you can create a clear accountability framework that helps bolster audience trust in the AI-generated content you produce.
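
One way to turn such guidelines into an enforceable rule is a publishing gate: AI-generated drafts cannot go live until enough named reviewers have signed off, and every sign-off is timestamped for the audit trail. The Python sketch below is hypothetical (the `Draft` class, `publish` function, and one-reviewer default are illustrative assumptions, not features of any particular CMS).

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Draft:
    """Hypothetical AI-assisted draft awaiting human review."""
    title: str
    ai_generated: bool
    # (reviewer, UTC timestamp) pairs: an audit trail of who approved, and when
    approvals: list = field(default_factory=list)

    def approve(self, reviewer: str) -> None:
        """Record a named reviewer's sign-off."""
        self.approvals.append((reviewer, datetime.now(timezone.utc)))

    def can_publish(self, required_reviews: int = 1) -> bool:
        """AI-generated drafts need sign-off from enough *distinct* reviewers."""
        if not self.ai_generated:
            return True
        reviewers = {name for name, _ in self.approvals}
        return len(reviewers) >= required_reviews


def publish(draft: Draft, required_reviews: int = 1) -> str:
    """Refuse to publish until the review policy is satisfied."""
    if not draft.can_publish(required_reviews):
        raise PermissionError(f"{draft.title!r} needs human review before publishing")
    return f"Published: {draft.title}"


draft = Draft(title="Q3 content trends", ai_generated=True)
draft.approve("editor@example.com")
print(publish(draft))  # Published: Q3 content trends
```

Counting distinct reviewer names means one person approving twice does not satisfy a two-reviewer policy, which keeps accountability tied to real people.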

An image comparing content created by humans versus AI, emphasizing the need for human oversight. (Source: Marketing Insider Group)

Bias in AI Content: Identification and Mitigation Strategies

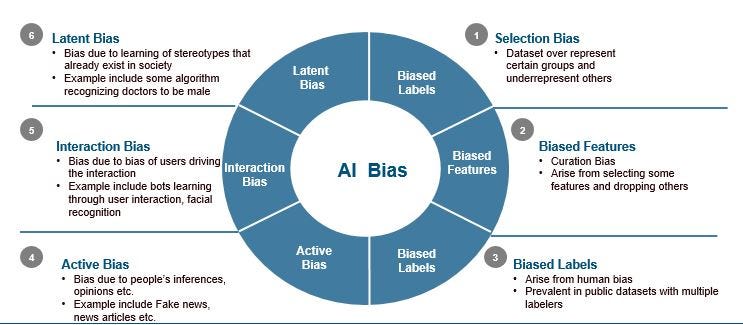

Addressing bias in AI-generated content is essential for promoting fairness and inclusivity. Effective methods for detecting bias include using algorithmic auditing tools such as IBM's AI Fairness 360. These resources enable you to compute fairness metrics and identify any biases present in AI systems.
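
Two of the most common fairness metrics, disparate impact and statistical parity difference, are simple enough to compute by hand. The Python sketch below reimplements them with the standard library so the arithmetic is visible; it does not call AI Fairness 360, which reports the same quantities (for example, through its `BinaryLabelDatasetMetric` class). The audit data and group names are hypothetical, and the 0.8 cutoff is the common "four-fifths" rule of thumb, not a universal threshold.

```python
def selection_rate(outcomes, groups, group):
    """Share of favorable outcomes (1s) among the items that belong to `group`."""
    member_outcomes = [o for o, g in zip(outcomes, groups) if g == group]
    if not member_outcomes:
        raise ValueError(f"no items for group {group!r}")
    return sum(member_outcomes) / len(member_outcomes)


def statistical_parity_difference(outcomes, groups, unprivileged, privileged):
    """P(favorable | unprivileged) - P(favorable | privileged); 0 means parity."""
    return (selection_rate(outcomes, groups, unprivileged)
            - selection_rate(outcomes, groups, privileged))


def disparate_impact(outcomes, groups, unprivileged, privileged):
    """P(favorable | unprivileged) / P(favorable | privileged); 1 means parity."""
    privileged_rate = selection_rate(outcomes, groups, privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return selection_rate(outcomes, groups, unprivileged) / privileged_rate


# Hypothetical audit: 1 = the AI tool featured a creator's work, 0 = it did not.
outcomes = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

di = disparate_impact(outcomes, groups, unprivileged="B", privileged="A")
spd = statistical_parity_difference(outcomes, groups, unprivileged="B", privileged="A")
print(f"disparate impact = {di:.2f}, parity difference = {spd:.2f}")
# disparate impact = 0.33, parity difference = -0.50
if di < 0.8:  # "four-fifths" rule of thumb
    print("Possible bias: review how the tool treats group B")
```

Here group A is featured 75% of the time and group B only 25%, so the ratio of 0.33 falls well below 0.8 and flags the tool for closer review.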

Building diverse datasets drawn from varied sources and regularly assessing AI output for biased representations will improve the quality and trustworthiness of your content. Many organizations report success with these practices, and their audiences have responded positively to the commitment to inclusivity.

By actively engaging in bias mitigation strategies, you demonstrate a commitment to ethics and add substantial value to your audience's experience.

Visualization of a machine learning pipeline, demonstrating the steps involved in identifying and mitigating bias in AI content. (Source: Google Blogger)

Leveraging Technology for Improved Explainability in AI Content

The complexity of AI models can hinder understanding, making it vital to use technologies that enhance explainability. Tools like LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) show which inputs drove a particular model output, which helps you explain, in accessible terms, how AI influences your content.
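
SHAP is built on Shapley values from game theory: each input feature is credited with its average marginal contribution to a prediction across all combinations of the other features. The Python sketch below computes exact Shapley values by brute force for a hypothetical three-feature "engagement score" model; it does not use the `shap` library, which estimates these values efficiently for real models. Replacing absent features with a single baseline vector is a simplifying assumption.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Each feature's value is its marginal contribution to `predict`, averaged
    over every coalition of the other features with the standard Shapley
    weights. Features outside a coalition take their `baseline` values.
    Cost grows as 2**n, so this is only practical for a handful of features.
    """
    n = len(instance)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                present = set(coalition)
                without_i = [instance[j] if j in present else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = instance[i]
                phi[i] += weight * (predict(with_i) - predict(without_i))
    return phi


# Hypothetical model over three binary features:
# [has_image, question_headline, posted_on_weekday]
def engagement_score(x):
    return 2 * x[0] + 3 * x[1] + x[0] * x[2]


contributions = shapley_values(engagement_score, instance=[1, 1, 1], baseline=[0, 0, 0])
print([round(c, 2) for c in contributions])  # [2.5, 3.0, 0.5]
```

The contributions sum to the difference between the prediction (6) and the baseline prediction (0), and the interaction term `x[0] * x[2]` is split evenly between the two features involved. That additive breakdown is what makes SHAP-style explanations easy to present to an audience.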

When integrating these tools, favor user-friendly platforms that support educational interactions. Keep their purpose distinct from productivity gains: efficiency figures, such as a reported 83% reduction in blog drafting time, come from AI-assisted drafting rather than from explainability tools, whose value lies in making outputs understandable.

These tools not only enhance transparency but also strengthen the relationship with your audience, showcasing a commitment to clear communication.

An illustration showing feature importance graphs generated by LIME, highlighting how technology supports AI explainability. (Source: Medium)

Building Effective Verification Workflows for AI Content

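To maintain the integrity of your AI-generated content, it is essential to establish strong verification workflows. These processes should include concrete steps for confirming the accuracy of AI-generated outputs, such as checking each factual claim against a credible source and having a named person sign off before publication.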
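
A verification workflow can be expressed as an ordered list of named checks that every draft must pass, which makes failures easy to report back to the writer. The Python sketch below assumes a hypothetical draft structure (a list of claims with optional sources, an approved-source list, and a fact-checker field); real workflows might plug in link checkers or a fact-checking queue instead of these simple rules.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Check:
    name: str
    passed: Callable[[dict], bool]


# Hypothetical checks; each receives the whole draft and returns True on success.
CHECKS = [
    Check("every claim cites a source",
          lambda d: all(claim.get("source") for claim in d["claims"])),
    Check("all cited sources are on the approved list",
          lambda d: all(claim["source"] in d["approved_sources"]
                        for claim in d["claims"] if claim.get("source"))),
    Check("a human fact-checker signed off",
          lambda d: bool(d.get("fact_checker"))),
]


def verify(draft: dict) -> List[str]:
    """Run every check and return the names of the ones that failed."""
    return [check.name for check in CHECKS if not check.passed(draft)]


draft = {
    "claims": [
        {"text": "Survey finding A", "source": "https://example.org/survey"},
        {"text": "Statistic B", "source": None},  # AI-generated claim with no source
    ],
    "approved_sources": {"https://example.org/survey"},
    "fact_checker": None,
}
print(verify(draft))
# ['every claim cites a source', 'a human fact-checker signed off']
```

Returning every failed check, rather than stopping at the first, gives editors a complete to-do list in one pass.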

Giving users tools to verify the authenticity of your content further boosts audience trust. Walking through real-world verification examples shows your audience why upholding content-validation standards matters.
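
A simple, widely available building block for user-side verification is a cryptographic fingerprint: publish the SHA-256 digest of an article, and anyone can recompute it to confirm their copy has not been altered. The Python sketch below uses only the standard library; the function names are hypothetical. Note that a bare hash proves integrity, not authorship. Proving who published something requires a digital signature or signed provenance metadata, such as the C2PA Content Credentials standard.

```python
import hashlib


def content_fingerprint(text: str) -> str:
    """SHA-256 hex digest of the UTF-8 text; changing any character changes it."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def matches_published(text: str, published_digest: str) -> bool:
    """True if `text` is exactly the content that produced `published_digest`."""
    return content_fingerprint(text) == published_digest


article = "Our Q3 report was drafted with AI assistance and reviewed by our editors."
digest = content_fingerprint(article)  # publish this alongside the article

print(matches_published(article, digest))                # True
print(matches_published(article + " (edited)", digest))  # False
```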

A structured approach to verification enables you to combat misinformation while giving your audience the tools needed for critical assessment of the content you produce.

A diagram detailing a step-by-step workflow for verifying AI-generated content. (Source: Boardmix)

Conclusion: The Future of Trust in an AI-Driven World

As you navigate the complexities of AI in content creation, emphasizing transparency and ethical practices is crucial. Fostering trust with your audience requires clarity about how AI influences their experience.

Continued education about AI capabilities and limitations is vital for developing lasting relationships with your audience. By maintaining ethical practices alongside user engagement strategies, you can not only enhance user trust but also position your content positively in an ever-evolving digital landscape.

The future of AI in content creation rests on your ability to be transparent. By following these guidelines and adapting to new technological advancements, you can cultivate trust and provide valuable content to your audience.
