Why Governance in AI Matters: The Rise of Ethical Watermarking

AI watermarking involves embedding information into AI-generated content to indicate its authenticity and origin. This technique plays a crucial role in governance frameworks aimed at enhancing accountability and transparency within artificial intelligence applications. The increasing use of AI technologies necessitates regulatory measures to ensure ethical practices.

Recent legislation, notably the EU AI Act, requires providers of generative AI systems to mark synthetic audio, image, video, and text output in a machine-readable format so that it can be detected as artificially generated. The Act entered into force in August 2024, and these transparency obligations are scheduled to apply from August 2026 rather than 2025. The requirement is designed to give users clearer information about where content comes from, thereby enhancing trust in digital materials. In the United States, the October 2023 White House Executive Order on AI likewise emphasized watermarking as a means of identifying AI-generated content, setting a reference point for voluntary private-sector practice.

Establishing a standard of trust through effective governance frameworks for AI technologies is essential for user confidence. As stakeholders navigate the complexities of AI accountability, watermarking emerges as a critical tool to ensure a responsible approach to AI-generated content.

An infographic depicting the rise of various regulations concerning AI watermarking in the United States (Source: Tech News Lit)

Decoding AI Watermarking: Strategies for Implementation

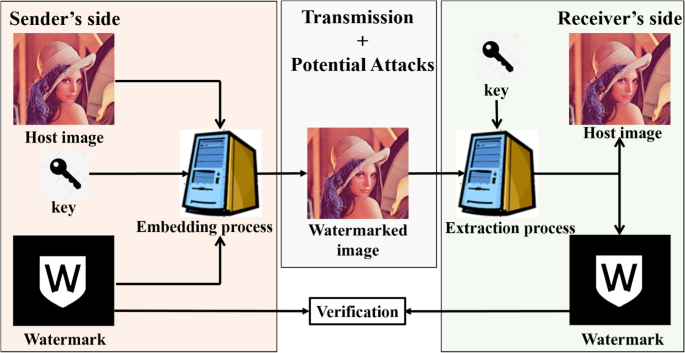

AI watermarking techniques can generally be divided into two main categories: visible and invisible watermarks. Visible watermarks are easily recognizable overlays on images, such as logos or text, which clearly indicate the source of the content. In contrast, invisible watermarks are embedded within the image data itself, often requiring specialized software for detection.
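As a rough illustration of the invisible category, the sketch below hides a short bit string in the least significant bit (LSB) of each pixel value. It is a minimal sketch under stated assumptions: the image is modelled as a flat list of 8-bit grayscale values, and the function names `embed_lsb` and `extract_lsb` are made up for this example. LSB embedding is one of the simplest schemes and is easy to strip, so it should be read as a teaching example rather than what any production system uses.

```python
def embed_lsb(pixels, bits):
    """Return a copy of `pixels` with `bits` written into the lowest bit
    of the first len(bits) pixel values (hypothetical helper)."""
    if len(bits) > len(pixels):
        raise ValueError("payload is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit of the 8-bit value, then set it to the payload bit.
        marked[i] = (marked[i] & 0xFE) | (bit & 1)
    return marked


def extract_lsb(pixels, n_bits):
    """Read back the lowest bit of the first n_bits pixel values."""
    return [p & 1 for p in pixels[:n_bits]]


# Assumed toy data: eight grayscale pixels and an eight-bit payload.
pixels = [200, 13, 77, 254, 0, 255, 128, 64]
payload = [1, 0, 1, 1, 0, 0, 1, 0]

marked = embed_lsb(pixels, payload)
recovered = extract_lsb(marked, len(payload))
```

Each pixel changes by at most one intensity level, which is why the mark is invisible to the eye, and `recovered` matches `payload` only as long as the pixel values are left untouched.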

A further distinction exists between robust and fragile watermarks. Robust watermarks are designed to withstand manipulation such as compression and cropping, often by embedding the mark in a transform domain such as the Discrete Cosine Transform (DCT) rather than directly in pixel values. Conversely, fragile watermarks are deliberately sensitive: they break when the content changes, which makes them useful for indicating whether content has been altered or tampered with.
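To make the idea of DCT-based robustness more concrete, here is a minimal sketch that stores one bit in a single DCT coefficient using quantization index modulation (QIM), a common textbook approach. Everything specific here is an assumption for illustration: the one-dimensional block of 8 samples (images typically use 8x8 blocks), the step size `STEP`, the coefficient index `K`, and the helper names. It is not a description of how any particular product embeds its watermark.

```python
import math

N = 8        # block length (1-D for brevity)
STEP = 8.0   # quantization step: larger is more robust but more visible
K = 3        # index of the mid-frequency coefficient that carries the bit


def _scale(k):
    # Scaling factors of the orthonormal DCT-II.
    return math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)


def dct(block):
    """Orthonormal DCT-II of a length-N block."""
    return [
        _scale(k) * sum(x * math.cos(math.pi * (2 * n + 1) * k / (2 * N))
                        for n, x in enumerate(block))
        for k in range(N)
    ]


def idct(coeffs):
    """Inverse of dct() (orthonormal DCT-III)."""
    return [
        sum(_scale(k) * c * math.cos(math.pi * (2 * n + 1) * k / (2 * N))
            for k, c in enumerate(coeffs))
        for n in range(N)
    ]


def embed_bit(block, bit):
    """Snap coefficient K to the nearest multiple of STEP whose parity is `bit`."""
    coeffs = dct(block)
    coeffs[K] = round((coeffs[K] - bit * STEP) / (2 * STEP)) * 2 * STEP + bit * STEP
    return idct(coeffs)


def extract_bit(block):
    """Recover the bit from the parity of the quantized coefficient K."""
    return round(dct(block)[K] / STEP) % 2


# Assumed toy data: one row of eight grayscale samples.
block = [100, 104, 110, 118, 125, 130, 133, 135]
marked = embed_bit(block, 1)
# Simulate a mild distortion: store the marked samples as whole numbers.
distorted = [float(round(v)) for v in marked]
bit_after_distortion = extract_bit(distorted)
```

The bit survives any distortion that moves coefficient `K` by less than half of `STEP`, so rounding the samples to integers does not erase it. A payload stored in raw least significant bits would generally not survive the same treatment, which is the practical difference between a robust and a fragile mark.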

The implementation of these techniques presents multiple challenges. For instance, visible watermarks can detract from the aesthetics of content, while invisible watermarks remain susceptible to sophisticated removal techniques. Research indicates that no watermarking method is entirely immune to persistent removal efforts, highlighting the need for ongoing technical advancements to enhance reliability and effectiveness.

A diagram illustrating the different types of watermarking techniques available, including visible and invisible options (Source: Semantic Scholar)

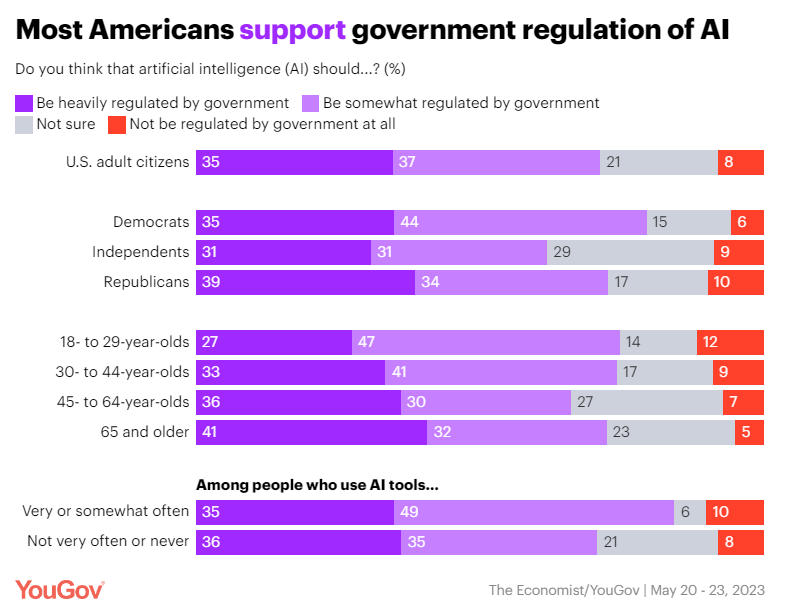

Watermarking as a Compliance Tool: Meeting Regulatory Demands

Integrating watermarking into AI practices is not merely a technical challenge; it is also a compliance requirement under emerging legislation such as the EU AI Act. The Act requires AI-generated content to be marked in a machine-readable format, with the aim of protecting users and maintaining content integrity.

Failure to comply with these regulations can lead to significant consequences. For instance, non-compliance with the EU AI Act's transparency obligations can result in fines of up to €15 million or 3% of a company’s total worldwide annual turnover, whichever is higher. In the United States, by contrast, the approach has been encouragement rather than mandate: organizations are urged to adopt watermarking as part of a broader strategy to enhance transparency, as outlined in the White House Executive Order on AI.
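The penalty ceiling is easier to reason about with numbers. The short sketch below computes the upper bound for a given turnover, assuming the two figures cited above and assuming that the higher of the two applies, which is our reading of the Act's penalty provisions. It ignores any special rules, such as those for smaller companies, and the function name `fine_ceiling_eur` and the sample turnovers are purely illustrative. Actual fines are set case by case and may be far below the ceiling.

```python
FIXED_CAP_EUR = 15_000_000   # fixed ceiling cited above
TURNOVER_RATE_PCT = 3        # percentage of worldwide annual turnover cited above


def fine_ceiling_eur(annual_turnover_eur: int) -> int:
    """Upper bound on the fine, assuming the higher of the two caps applies."""
    turnover_cap = annual_turnover_eur * TURNOVER_RATE_PCT // 100
    return max(FIXED_CAP_EUR, turnover_cap)


# Hypothetical turnovers, chosen only to show both branches of the rule.
for turnover in (200_000_000, 500_000_000, 2_000_000_000):
    ceiling = fine_ceiling_eur(turnover)
    print(f"turnover EUR {turnover:,} -> ceiling EUR {ceiling:,}")
```

Under these assumptions, the percentage-based cap only becomes the binding one once annual turnover exceeds €500 million; below that, the fixed €15 million figure is the ceiling.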

To ensure compliance, organizations are encouraged to adopt best practices such as regularly auditing their watermarking systems and tracking regulatory developments. These proactive measures not only help demonstrate adherence but also contribute to a culture of accountability and trust around AI-generated content.

A flowchart that outlines the complete digital watermarking system, detailing the compliance process (Source: ResearchGate)

Building Trust Through Ethical Watermarking: A Necessity or a Hurdle?

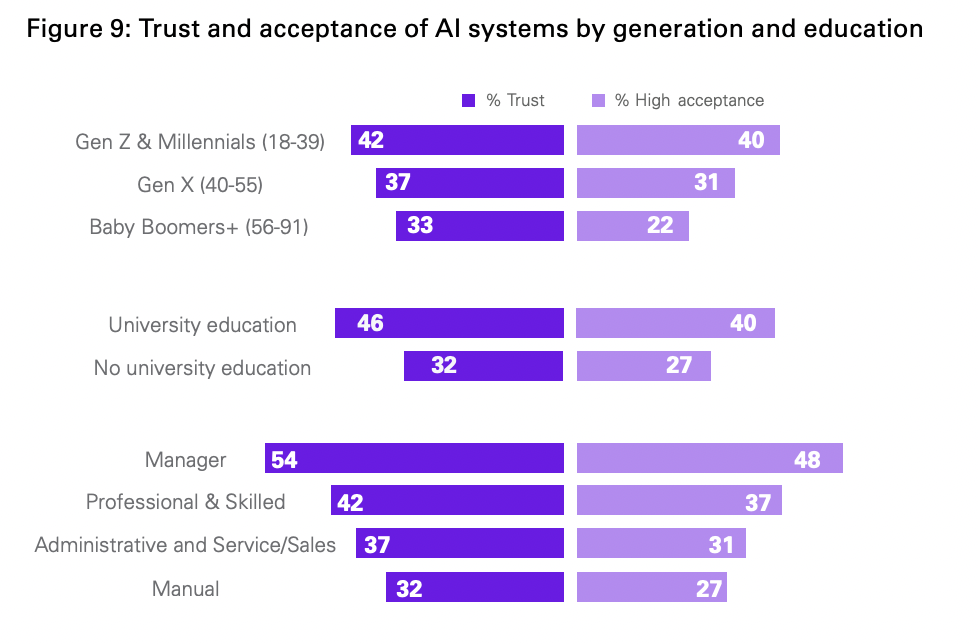

Effective watermarking can significantly enhance user trust in AI-generated content by providing assurances about the content's origin and integrity. By employing transparent practices, organizations can foster a sense of accountability, allowing users to easily differentiate between human-made and AI-created materials.

However, challenges persist. Studies indicate that users may harbor skepticism towards AI-labeled content regardless of its quality or accuracy, leading to potential erosion of trust. Additionally, privacy concerns frequently arise regarding the information embedded within watermarks, as it may inadvertently reveal identifying details about content creators, affecting their anonymity and security.

These ethical dilemmas underscore the importance of balancing transparency with individual rights. To address these concerns, all stakeholders must engage in continuous dialogue regarding the implications of watermarking practices and the strategies necessary to bolster user confidence in AI technologies.

A graph showing varying levels of trust among users for AI-generated content compared to traditional content (Source: HatchWorks)

Navigating Risks: The Challenges and Consequences of Watermark Adoption

Widespread adoption of watermarking practices is not without potential unintended consequences. One significant concern is that users might develop a false sense of security; although watermarks are intended to protect authenticity, they can also be manipulated or removed, potentially misleading users regarding the genuineness of the content.

Moreover, some users say they would limit their interaction with platforms that use watermarking. For example, approximately 30% of ChatGPT users reportedly indicated they would reduce their usage if watermarking became commonplace. This resistance may stem from a sense of coercion associated with mandatory labeling, which discourages user engagement.

Other unintended effects include the normalization of AI-generated content labeling, which may impact how audiences perceive both AI-generated and authentic content. This scenario could lead to an environment of increased skepticism across all content types. The challenge for organizations will be to navigate these complexities to maintain user trust while adhering to regulatory demands.

A visual depicting the cycle of user resistance to AI watermarking and the regulatory impact associated with it (Source: Springer Nature)

Future of Content Authenticity: Balancing Innovation with Responsibility

As the landscape of AI technologies continues to evolve, the future of watermarking hinges on the establishment of standard practices across industries. Engaging stakeholders (policymakers, tech companies, and users) is vital for ensuring that watermarking both serves compliance needs and promotes public trust in content integrity.

Taken together, regulatory initiatives such as the EU AI Act and the White House Executive Order signal a concerted effort to shape effective watermarking practices. Experts argue that watermarking must be combined with other regulatory measures to achieve meaningful content identification and verification.

Striking a balance between fostering innovation and upholding ethical responsibilities will remain a critical objective. Organizations must continually refine their watermarking strategies while remaining responsive to the changing dynamics of public trust and technological advancements.

An abstract image representing the future of content authenticity in the context of AI governance (Source: Adobe)

In summary, watermarking serves as a pivotal mechanism within the governance of AI-generated content, contributing to compliance, trust, and transparency in a rapidly advancing technological landscape. Embracing innovation alongside ethical considerations will ultimately dictate the successful implementation of watermarking strategies and shape the future direction of AI.
