In an era increasingly defined by technological advancement, ethical standards for artificial intelligence (AI) have become fundamental. As AI systems are integrated into content generation, concerns about fairness, accountability, transparency, and privacy have gained prominence. The FATE principles (Fairness, Accountability, Transparency, and Ethics) guide the development of trustworthy AI systems and help content creators, developers, and marketing professionals confirm that the technologies they use support equitable practices.

Public trust in AI technologies is fragile, and various studies point to growing consumer skepticism about AI-generated content. For instance, a recent Pew Research Center survey reports that 66% of Americans are concerned about AI-created content that lacks human oversight. Reports of significant ethical lapses, such as bias and misinformation in AI-generated content, further fuel this concern. Notable incidents, including biased algorithms used in hiring and lending, show that neglecting ethical considerations can lead to severe reputational damage and a loss of consumer confidence.

This guide offers practical insights into implementing ethical AI practices and ensuring content safety. Key topics include methods for reducing bias in AI content, guardrails for brand safety, and strategies for aligning governance with evolving AI standards. It also surveys the tools and algorithms available for monitoring the ethics of AI content. With a comprehensive understanding of ethical AI practices, stakeholders can make informed decisions that foster trust in AI technologies.

Understanding Effective Ethical Principles in AI Development

Adhering to ethical principles in AI development is crucial for creating systems that are fair, accountable, and transparent. The FATE framework (Fairness, Accountability, Transparency, and Ethics) is a foundational guideline for AI developers and content creators. Privacy is usually considered alongside it.

Key Ethical Principles

- Fairness: Achieving fairness in AI systems requires employing diverse data sets, implementing fairness-aware algorithms, and conducting ongoing evaluations using metrics that assess bias.
- Accountability: Establishing clear governance structures and developing guidelines enables organizations to actively monitor their AI systems. Implementing accountability mechanisms facilitates regular auditing of AI decision-making processes.
- Transparency: As highlighted by AI expert Adnan Masood, it is essential to ensure that AI systems are comprehensible. Building interpretable models and providing clear documentation of decision-making processes are critical steps in promoting transparency.
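
Bias-assessment metrics are easier to reason about with a concrete case. The following is a minimal sketch that assumes binary predictions (1 = favourable outcome, such as a draft approved or a candidate shortlisted) and a single categorical sensitive attribute. It computes per-group selection rates, the demographic parity difference, and the disparate impact ratio. The function names are illustrative and do not come from any particular library. The 0.8 reference point is the US "four-fifths rule" heuristic, which is not a universal standard.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of favourable (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest selection-rate gap between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest selection rate divided by the highest (1 means parity).

    Ratios below 0.8 are commonly flagged under the four-fifths rule heuristic.
    """
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


if __name__ == "__main__":
    preds = [1, 1, 0, 1, 0, 1, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(selection_rates(preds, groups))
    print(demographic_parity_difference(preds, groups))
    print(disparate_impact_ratio(preds, groups))
```

In this toy data, group `a` is selected three times as often as group `b`. The ratio therefore falls well below 0.8, and the model would warrant a closer look.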

Implementation Strategies

Effective ethical implementation relies on various strategies:

- Data Transparency: Documenting data sources and preprocessing steps makes it possible to identify biases systematically.
- Fairness Toolkits: Tools such as IBM AI Fairness 360 and Google’s Fairness Indicators let organizations assess bias in their AI systems and address it before deployment.
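
For the data-transparency step, one lightweight option in the spirit of "datasheets for datasets" is to keep a structured record of each dataset's sources and preprocessing steps, together with a simple representation check. The sketch below is hypothetical. The `DatasetRecord` fields and the 10% minimum-share threshold are illustrative assumptions, not a standard schema.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class DatasetRecord:
    """Hypothetical documentation record for one training dataset."""
    name: str
    sources: list[str]
    preprocessing_steps: list[str] = field(default_factory=list)
    group_labels: list[str] = field(default_factory=list)  # one label per example

    def log_step(self, description: str) -> None:
        """Append a preprocessing step so the data lineage stays auditable."""
        self.preprocessing_steps.append(description)

    def group_shares(self) -> dict[str, float]:
        """Fraction of examples belonging to each demographic group."""
        counts = Counter(self.group_labels)
        total = sum(counts.values())
        return {g: c / total for g, c in counts.items()} if total else {}

    def underrepresented(self, min_share: float = 0.10) -> list[str]:
        """Groups whose share falls below an illustrative minimum threshold."""
        return sorted(g for g, s in self.group_shares().items() if s < min_share)


if __name__ == "__main__":
    record = DatasetRecord(
        name="support-articles-v1",  # hypothetical dataset name
        sources=["internal knowledge base", "licensed news archive"],
        group_labels=["group_a"] * 19 + ["group_b"],
    )
    record.log_step("removed duplicate articles")
    print(record.group_shares())
    print(record.underrepresented())
```

Keeping the record next to the data means the provenance questions an auditor would ask already have a written answer.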

Research from a range of organizations suggests that integrating these principles into AI development produces systems that are better aligned with societal values and better equipped to address ethical concerns.

Source: LinkedIn

Ensuring Bias-Free AI Content

To create AI-generated content that is equitable and fair, it is essential for stakeholders to recognize the potential for bias and adopt strategies to mitigate its effects.

Methods for Bias Reduction

- Diverse Data Sets: Collecting data across a range of demographic groups makes training data more representative, which can reduce bias in the resulting models.
- Fairness-Aware Algorithms: Algorithms specifically designed to identify and counteract bias can significantly improve the fairness of AI-generated content.
- Continuous Evaluation: Regular assessments using fairness metrics are crucial for identifying and addressing biases that may emerge over time.
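
Reweighing is one example of a fairness-aware technique. It is a preprocessing method from Kamiran and Calders and is also available in IBM AI Fairness 360. The method gives each training example the weight P(group) × P(label) / P(group, label), so that group membership and label become statistically independent in the weighted data. Below is a minimal, dependency-free sketch, assuming one sensitive attribute and a categorical label. The helper `weighted_positive_rate` is added here only to show the effect.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """One weight per example: P(group) * P(label) / P(group, label).

    Group/label combinations rarer than independence would predict get
    weights above 1; over-represented combinations get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of label 1 within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den


if __name__ == "__main__":
    groups = ["a", "a", "a", "b", "b", "b"]
    labels = [1, 1, 0, 1, 0, 0]
    weights = reweighing_weights(groups, labels)
    print(weights)
    for g in ("a", "b"):
        print(g, weighted_positive_rate(groups, labels, weights, g))
```

Before reweighing, group `a` has a positive rate of 2/3 and group `b` a rate of 1/3. After reweighing, both weighted rates are 0.5. Many training APIs accept per-example weights, so the output can be passed to model fitting without changing the data itself.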

A notable instance involves Amazon’s AI recruiting tool, which was discovered to exhibit bias against women, prompting the company to abandon the tool altogether. This case underscores the importance of conducting rigorous testing of AI systems for bias and highlights that organizations must commit to fairness when designing AI applications.
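
A counterfactual check is one way to test for the kind of bias seen in the Amazon case. The idea is to swap gendered terms in an input and see whether the model's score changes. In the sketch below, `score_resume` is a hypothetical, deliberately biased toy standing in for the model under test, so the check has something to catch. The swap list is a small illustrative sample, not a complete lexicon. Mapping "her" to "his" is one such simplification.

```python
import re

# Illustrative, deliberately incomplete list of gendered terms to swap.
SWAPS = {
    "women's": "men's", "men's": "women's",
    "she": "he", "he": "she",
    "her": "his", "his": "her",
}
# Longest terms first; \b restricts matches to whole words.
_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, sorted(SWAPS, key=len, reverse=True))) + r")\b",
    re.IGNORECASE,
)


def swap_gendered_terms(text: str) -> str:
    """Swap each gendered term for its counterpart, keeping initial capitals."""
    def replace(match: re.Match) -> str:
        word = match.group(0)
        swapped = SWAPS[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped
    return _PATTERN.sub(replace, text)


def score_resume(text: str) -> float:
    """Hypothetical toy model with a built-in bias for the test to surface."""
    score = 0.5
    if "python" in text.lower():
        score += 0.3
    if "women's" in text.lower():
        score -= 0.2  # the kind of learned penalty a real audit looks for
    return score


def counterfactual_gap(texts, scorer, tolerance=0.01):
    """Return (original, swapped, gap) for inputs whose score shifts after swapping."""
    flagged = []
    for text in texts:
        swapped = swap_gendered_terms(text)
        gap = scorer(text) - scorer(swapped)
        if abs(gap) > tolerance:
            flagged.append((text, swapped, gap))
    return flagged


if __name__ == "__main__":
    resumes = [
        "Captain of the women's chess club; writes Python.",
        "Led the robotics team; writes Python.",
    ]
    for original, swapped, gap in counterfactual_gap(resumes, score_resume):
        print(f"score shifted by {gap:+.2f}: {original!r} -> {swapped!r}")
```

The tolerance is there because a real model's scores can fluctuate slightly for reasons unrelated to bias. Any input flagged above it deserves manual review.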

Source: EE Times

Establishing Safety Measures for Brand Protection

As AI content generation continues to grow in prevalence, organizations must prioritize brand safety by establishing ethical guardrails.

Governance Structures

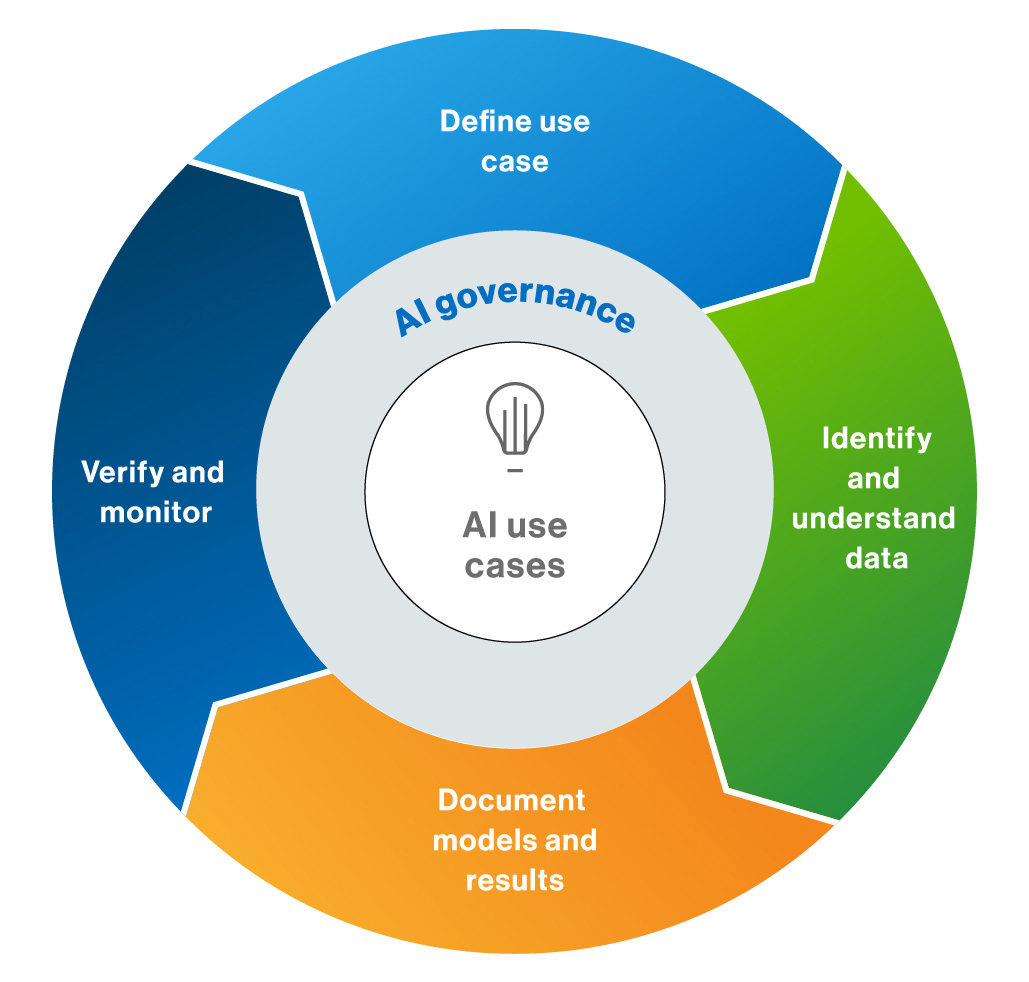

Creating robust AI governance frameworks is vital for maintaining oversight of AI-generated content. Governance frameworks can include:

- Clear Policies: Organizations should define policies for the use of AI technology that cover ethical practices and compliance with legal standards.
- AI Auditing Tools: Software that audits AI-generated content helps verify adherence to established ethical standards.
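
A content-auditing step can be as simple as running each generated draft through a set of policy rules before publication. The sketch below is a hypothetical rule-based auditor. The blocked terms, the required AI-disclosure sentence, and the word limit are placeholder assumptions standing in for an organization's own written policy. In practice, rules like these are often combined with classifier-based checks and human review.

```python
from dataclasses import dataclass


@dataclass
class AuditResult:
    passed: bool
    violations: list[str]


# Placeholder policy values; an organization would substitute its own rules.
BLOCKED_TERMS = {"guaranteed cure", "risk-free"}
REQUIRED_DISCLOSURE = "This content was created with AI assistance."
MAX_WORDS = 300


def audit_content(text: str) -> AuditResult:
    """Check one AI-generated draft against simple brand-safety rules."""
    violations = []
    lowered = text.lower()
    for term in sorted(BLOCKED_TERMS):
        if term in lowered:
            violations.append(f"blocked term: {term!r}")
    if REQUIRED_DISCLOSURE.lower() not in lowered:
        violations.append("missing AI disclosure")
    if len(text.split()) > MAX_WORDS:
        violations.append(f"exceeds {MAX_WORDS} words")
    return AuditResult(passed=not violations, violations=violations)


if __name__ == "__main__":
    draft = "A risk-free, guaranteed cure for stress!"
    print(audit_content(draft))
```

Returning every violation, rather than stopping at the first one, gives reviewers a complete picture of why a draft was held back.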

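A prominent example is the EU AI Act, which regulates AI through a risk-based approach. Its primary aim is to protect health, safety, and fundamental rights rather than brands specifically, but its safety measures also help organizations that use AI avoid reputational harm. Providers of high-risk AI systems must assess those systems' risks before placing them on the market, which encourages a proactive approach to content safety.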
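
An organization might mirror the Act's risk-based approach in an internal pre-deployment gate. In the sketch below, the four tiers follow the Act's broad categories: prohibited, high-risk, limited-risk with transparency duties, and minimal-risk. The mapping from use cases to tiers and the checks required at each tier are simplified, hypothetical assumptions for illustration. Classifying a real system under the Act requires legal review.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified mapping for illustration only.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.PROHIBITED,
    "cv_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}

# Internal evidence this sketch requires before launch at each tier (assumed).
REQUIRED_CHECKS = {
    RiskTier.HIGH: {"risk_assessment", "bias_audit", "human_oversight_plan"},
    RiskTier.LIMITED: {"ai_disclosure"},
    RiskTier.MINIMAL: set(),
}


def deployment_gate(use_case: str, completed_checks: set[str]) -> tuple[bool, set[str]]:
    """Return (approved, blockers): missing checks or the reason for refusal."""
    tier = USE_CASE_TIERS.get(use_case)
    if tier is None:
        return False, {"classification_review"}  # unknown use cases need review first
    if tier is RiskTier.PROHIBITED:
        return False, {"prohibited_use"}
    missing = REQUIRED_CHECKS[tier] - completed_checks
    return not missing, missing


if __name__ == "__main__":
    print(deployment_gate("cv_screening", {"risk_assessment"}))
    print(deployment_gate("customer_chatbot", {"ai_disclosure"}))
```

Unknown use cases are refused by default. The design choice is that nothing ships until someone has classified it.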

Source: Collibra

Aligning Content Governance With Evolving AI Standards

For content creators and AI developers, aligning content governance with existing ethical AI standards is essential.

Best Practices

Alignment with these standards involves implementing best practices such as:

- Framework Adoption: Organizations should adopt frameworks established by regulatory bodies, such as the EU, which provide detailed guidelines on ethical AI use.
- Case Studies: Learning from the successes of organizations like Google and Microsoft, which have integrated ethical frameworks into their operations, can impart valuable insights.

By adhering to these practices, organizations can strengthen their governance structures and maintain consistent compliance with ethical standards.

Source: Meclabs

Tools for Monitoring AI Content Ethics

Tools that help monitor the ethics of AI content are crucial for organizations pursuing compliance and transparency.

Overview of Tools

- Bias Detection Tools: Software such as IBM AI Fairness 360 and Microsoft Fairlearn lets developers assess AI models against fairness metrics and check their alignment with ethical standards.
- Evaluation Frameworks: Evaluation frameworks enable organizations to assess AI-generated content consistently for adherence to ethical guidelines.
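
Bias-detection toolkits such as AI Fairness 360 and Fairlearn also report metrics based on error rates, not just on selection rates. One of these is the equal opportunity difference: the gap in true positive rates between groups. It requires ground-truth labels as well as predictions. The following is a dependency-free sketch, assuming binary labels and predictions. `evaluate_batch` is a hypothetical evaluation step, and its 0.1 threshold is an arbitrary example rather than a recommended value.

```python
from collections import defaultdict


def true_positive_rates(y_true, y_pred, groups):
    """Per-group share of actual positives that the model predicted positive."""
    positives = defaultdict(int)
    hits = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            hits[group] += pred
    return {g: hits[g] / positives[g] for g in positives}


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest true-positive-rate gap between groups (0 means equal opportunity)."""
    rates = true_positive_rates(y_true, y_pred, groups)
    if len(rates) < 2:
        return 0.0  # fewer than two groups with positives: no gap measurable
    return max(rates.values()) - min(rates.values())


def evaluate_batch(y_true, y_pred, groups, max_gap=0.1):
    """Hypothetical evaluation step that flags a batch whose TPR gap exceeds max_gap."""
    gap = equal_opportunity_difference(y_true, y_pred, groups)
    return {
        "tpr_by_group": true_positive_rates(y_true, y_pred, groups),
        "gap": gap,
        "passed": gap <= max_gap,
    }


if __name__ == "__main__":
    y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
    y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
    groups = ["a"] * 5 + ["b"] * 5
    print(evaluate_batch(y_true, y_pred, groups))
```

Running the same evaluation on each new batch of outputs turns a one-off audit into the kind of ongoing monitoring described above.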

As companies increasingly integrate AI into their operations, accessing these monitoring tools can help mitigate risks associated with AI-generated content.

Source: ResearchGate

Learning From AI Ethics Failures

Reflecting on past ethical lapses in AI can offer invaluable lessons for shaping future practices in content generation.

High-Profile Failures

Incidents such as Amazon’s hiring algorithm and the credit-limit algorithm behind Apple’s credit card illustrate the consequences of ignoring ethical AI practices. Both companies faced significant scrutiny and reputational damage over real or alleged algorithmic bias.

Understanding the ramifications of these cases emphasizes the necessity for organizations to establish clear ethical frameworks. Expert Kjell Carlsson highlights the importance of developing guidelines that ensure accountability in AI practices to prevent similar oversights.
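
One concrete accountability mechanism is an append-only record of AI decisions that auditors can review after the fact. The sketch below is a hypothetical, minimal design. Each entry stores the SHA-256 hash of the previous entry, so any later edit to a past record breaks the chain and can be detected. The `DecisionLog` class and its fields are illustrative assumptions. Everything is kept in memory, whereas a production system would need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class DecisionLog:
    """Append-only, hash-chained log of AI decisions (illustrative sketch)."""

    def __init__(self):
        self.entries = []

    def record(self, model_id: str, inputs: dict, output,
               reviewer: Optional[str] = None) -> dict:
        """Append one decision, linking it to the hash of the previous entry."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_id": model_id,
            "inputs": inputs,
            "output": output,
            "reviewer": reviewer,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the hash itself, serialized in a stable key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Return True only if no entry has been altered, removed, or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = DecisionLog()
    log.record("screening-v2", {"applicant_id": 17}, "shortlist")
    log.record("screening-v2", {"applicant_id": 18}, "reject", reviewer="j.doe")
    print(log.verify())
    log.entries[0]["output"] = "reject"  # simulate tampering with a past decision
    print(log.verify())
```

Recording the reviewer alongside each decision makes clear who was responsible for overseeing it. That addresses the accountability concern directly.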

Source: UNODC

Societal Impact of Ethical AI Practices

The long-term implications of adhering to ethical practices in AI are profound and deserve careful consideration.

Potential Benefits

Ethical AI practices not only protect individual rights but also promote societal trust. The World Economic Forum predicts that AI will influence 75% of significant societal decisions by 2040, which makes it all the more important to understand the societal benefits of ethical AI practices.

By taking part in discussions about AI ethics, content creators can promote responsible practices that lead to positive outcomes in their communities.

Source: UNODC

Conclusion

Integrity in AI and content safety is essential for maintaining public trust and ensuring that technological advancements serve society positively. Adopting ethical principles encapsulated in the FATE framework and implementing effective governance structures are critical steps in the integration of AI into content creation practices.

Through continuous assessment, the use of monitoring tools, and the lessons drawn from past ethical failures, organizations can adopt a future-focused approach that fosters equitable and responsible AI deployment. As the landscape of AI continues to evolve, ongoing discourse and adherence to ethical standards will ultimately shape the relationship between technology and society.

By applying these insights, stakeholders can work towards a more trustworthy and ethically sound AI environment that benefits all.
