Can I Trust AI-Generated Content? Unlocking Authenticity

As artificial intelligence (AI) continues to shape content creation, establishing trust and authenticity has become increasingly important. AI-generated content covers any output produced with algorithms and machine learning techniques, including text, images, audio, and video. That breadth raises significant questions about the credibility and integrity of such material.

Concerns about authenticity arise from how well AI can mimic human creativity. Because AI-generated content is now so widespread, users find it difficult to tell genuine material from potentially misleading outputs. Watermarking and transparency measures are therefore critical to managing these trust issues. A watermark is a marker embedded in content that signals its origin, helping users verify where the material came from. It does not guarantee that the content is accurate, but it gives consumers a clearer understanding of its source and reliability.

Establishing authenticity in AI-generated outputs not only bolsters user confidence but also highlights the importance of transparency within this technology. Various innovations, particularly in watermarking technology, are paving the way for trust. Notable efforts include Google's SynthID, which embeds watermarks in generated content, and OpenAI's DALL-E 3, which attaches provenance metadata to the images it produces. For building user trust, integrating watermarking as a reliable measure is essential if AI is to be perceived as a responsible participant in content creation.

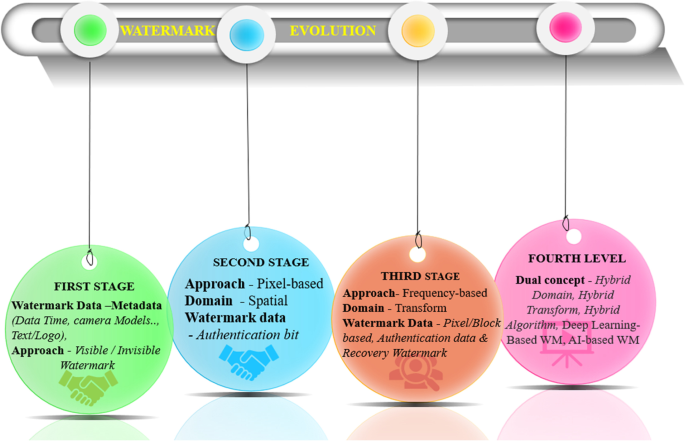

Infographic illustrating various types of watermarking techniques for multimedia content (Source: Springer Nature)

What Assures Authenticity? The Role of Watermarking in Content Verification

Watermarking embeds information in digital content to protect it and establish its origin. Different media formats call for different methods. In text, watermarking typically biases the model's token probabilities toward a pseudo-randomly chosen set of preferred "green tokens" and away from restricted "red tokens." A detector then checks whether green tokens appear more often than chance would predict. Because that statistical signal needs enough tokens to stand out, the approach is generally less effective for short texts.
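The green-token idea can be made concrete with a small sketch. This is a toy illustration under stated assumptions, not the scheme used by any particular product: the key, the 50/50 green split, the hash-based assignment, and the helper names `is_green`, `generate_watermarked`, and `green_z_score` are all hypothetical, and the "generator" simply picks a green word instead of sampling from a language model.

```python
import hashlib
import math

GREEN_FRACTION = 0.5  # assumed share of the vocabulary that is "green" at each step


def is_green(prev_token: str, token: str, key: str = "demo-key") -> bool:
    """Pseudo-randomly assign `token` to the green list, seeded by the previous token."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] / 256 < GREEN_FRACTION


def generate_watermarked(vocab: list[str], length: int, key: str = "demo-key") -> list[str]:
    """Toy generator: always emit a green token when one exists (no real model involved)."""
    tokens = [vocab[0]]
    while len(tokens) < length:
        prev = tokens[-1]
        green = [t for t in vocab if is_green(prev, t, key)]
        tokens.append(green[0] if green else vocab[0])
    return tokens


def green_z_score(tokens: list[str], key: str = "demo-key") -> float:
    """z-score of the observed green count against the no-watermark expectation."""
    n = len(tokens) - 1  # number of (previous, current) pairs that can be scored
    if n < 1:
        return 0.0
    greens = sum(is_green(p, t, key) for p, t in zip(tokens, tokens[1:]))
    expected = GREEN_FRACTION * n
    std = math.sqrt(n * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (greens - expected) / std


if __name__ == "__main__":
    vocab = [f"w{i}" for i in range(50)]
    long_text = generate_watermarked(vocab, 40)
    print("z-score, 40 tokens:", round(green_z_score(long_text), 2))
    print("z-score, first 5 tokens:", round(green_z_score(long_text[:5]), 2))
```

With a 50/50 split, the score can never exceed the square root of the number of scored token pairs, so a five-token text tops out at 2 even when every token is green. That ceiling is one way to see why short texts are hard to flag with confidence.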

For images, watermarking techniques such as those used by Google's SynthID rely on two neural networks: one subtly modifies pixels to embed an invisible pattern, and the other detects that pattern to confirm the image's origin. Platforms like IMATAG and Truepic also support image integrity through watermarks that are either embedded during generation or applied after creation. For audio, solutions like AudioSeal embed watermarks while preserving audio quality.

Video watermarking practices are designed to remain detectable even after common visual modifications, such as cropping or filtering. These different methodologies are tailored to maintain authenticity across various media formats while presenting unique challenges, such as balancing the strength of the watermark against content quality.
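The trade-off between watermark strength and content quality can be shown with a deliberately simple stand-in. The sketch below uses a classic additive pseudo-random pattern with correlation-based detection on a flat list of grayscale values. It is not how SynthID, AudioSeal, or any video watermarking product works; the function names, the integer key, and the detection threshold of 4.0 are assumptions chosen for illustration.

```python
import random


def make_pattern(n: int, key: int) -> list[int]:
    """Key-dependent sequence of +1/-1 values, one per pixel."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(pixels: list[float], key: int, strength: float) -> list[float]:
    """Add the scaled pattern to the pixels, clipping to the 0..255 range."""
    pattern = make_pattern(len(pixels), key)
    return [min(255.0, max(0.0, p + strength * w)) for p, w in zip(pixels, pattern)]


def detect_score(pixels: list[float], key: int) -> float:
    """Correlate mean-centred pixels with the key's pattern; near 0 if no mark is present."""
    pattern = make_pattern(len(pixels), key)
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * w for p, w in zip(pixels, pattern)) / len(pixels)


def mean_distortion(original: list[float], changed: list[float]) -> float:
    """Average absolute change per pixel, a crude proxy for visible quality loss."""
    return sum(abs(a - b) for a, b in zip(original, changed)) / len(original)


# A synthetic "image": 16384 random grayscale values (assumed host content).
host_rng = random.Random(0)
host = [host_rng.uniform(0, 255) for _ in range(16384)]
marked = embed(host, key=42, strength=8.0)

if __name__ == "__main__":
    threshold = 4.0  # assumed decision threshold, half the embedding strength
    print("marked, right key:", detect_score(marked, 42) > threshold)
    print("unmarked image:   ", detect_score(host, 42) > threshold)
    print("distortion at strength 8:", round(mean_distortion(host, marked), 2))
```

A larger `strength` pushes the detection score further from zero, which makes the mark easier to find after noise or compression, but it also raises the per-pixel distortion. Production systems tune this balance with far more sophisticated, perceptually aware methods.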

Overall, watermarking technologies must address the challenges associated with different content types. Achieving robustness against tampering while ensuring consistent detectability is crucial for maintaining user trust. As AI-generated content evolves, so too must the techniques employed to secure and authenticate it.

Comparison image showing watermarked and non-watermarked photos for understanding watermarking integrity (Source: Nate Zeman)

Technological Innovations: How Blockchain Verifies AI Disclosures

Blockchain technology represents a considerable advancement in content verification, providing transparency and trust in AI-generated content. As a decentralized ledger, blockchain verifies content origins and ensures the immutability of recorded information, which facilitates robust tracking of changes and confirmation of authenticity.
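The immutability property comes from chaining hashes: each record stores the hash of the one before it, so changing any earlier record invalidates everything after it. The sketch below shows only that tamper-evidence mechanism in a single process. It is not a blockchain, since it has no network, consensus, or signatures, and the field names and helper functions are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record: dict) -> str:
    """Deterministic SHA-256 hash of a record (keys sorted for stable output)."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


def append_entry(ledger: list[dict], content: bytes, note: str) -> None:
    """Append a record that commits to the content and to the previous entry."""
    prev = ledger[-1]["entry_hash"] if ledger else GENESIS
    body = {
        "content_hash": hashlib.sha256(content).hexdigest(),
        "note": note,
        "prev_hash": prev,
    }
    ledger.append({**body, "entry_hash": record_hash(body)})


def verify_ledger(ledger: list[dict]) -> bool:
    """Recompute every hash and link; any edited entry breaks the chain."""
    prev = GENESIS
    for entry in ledger:
        body = {k: entry[k] for k in ("content_hash", "note", "prev_hash")}
        if entry["prev_hash"] != prev or entry["entry_hash"] != record_hash(body):
            return False
        prev = entry["entry_hash"]
    return True


if __name__ == "__main__":
    ledger: list[dict] = []
    append_entry(ledger, b"image bytes v1", "generated by an AI model")
    append_entry(ledger, b"image bytes v2", "cropped by an editor")
    print("intact:", verify_ledger(ledger))
    ledger[0]["note"] = "photographed by a human"  # attempt to rewrite history
    print("after tampering:", verify_ledger(ledger))
```

A real blockchain adds distributed agreement on which chain is valid, which is what stops a single party from quietly rebuilding the whole chain after an edit.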

Related provenance technologies already improve the transparency of AI-generated outputs. An example is the C2PA (Coalition for Content Provenance and Authenticity) standard used in OpenAI's DALL-E 3, which attaches verifiable credentials to AI-generated content. C2PA itself relies on cryptographically signed metadata rather than a blockchain, although the two approaches can be combined. Similarly, platforms like Truepic apply comparable tamper-evidence principles to attach content credentials to images, helping confirm their integrity.
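Content credentials of this kind work by binding a claim about origin to the exact bytes of a file and then signing that claim. The sketch below illustrates the binding with Python's standard library only. It is not the C2PA format or any vendor's API: real C2PA manifests use certificate-based signatures and a defined structure, whereas this example substitutes a shared-secret HMAC, and the field names and functions are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(content: bytes, generator: str, secret: bytes) -> dict:
    """Build a claim about the content and sign it (HMAC stands in for a real signature)."""
    claim = {
        "generator": generator,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(secret, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict, secret: bytes) -> bool:
    """Check that the claim is untampered and still matches the content bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(secret, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    content_ok = manifest["claim"]["content_sha256"] == hashlib.sha256(content).hexdigest()
    return signature_ok and content_ok


if __name__ == "__main__":
    secret = b"demo-signing-secret"  # assumption: a key held by the issuing tool
    image = b"\x89PNG...fake image bytes"
    manifest = make_manifest(image, "example-image-model", secret)
    print("original file:", verify_manifest(image, manifest, secret))
    print("edited file:  ", verify_manifest(image + b"!", manifest, secret))
```

Unlike an embedded watermark, metadata like this can be stripped from a file, which is one reason the two techniques are often discussed as complements.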

Recent innovations highlight the efficiency of blockchain in verifying AI disclosures. Given the growing prevalence of AI-generated content, the demand for secure verification measures is paramount. The intersection of blockchain and watermarking technologies paves the way for a more reliable verification process in the future.

Diagram depicting a document verification system utilizing blockchain technology for authenticity (Source: Blockchain Council)

Why Transparency Matters: Ethical Implications of AI in Content Creation

The ethics of AI-generated content are central to establishing trust among consumers and stakeholders. As AI technology becomes entrenched in media production and distribution, the expectation of transparency intensifies. Ethical considerations include consumer protection, which means ensuring that generated content does not mislead or misrepresent information.

Anonymity in AI-generated content poses significant ethical challenges, especially when individuals or organizations exploit technology for deceptive purposes. Transparency measures, such as watermarking, help mitigate risks associated with untraceable content creation. By explicitly indicating AI-generated components, organizations can uphold ethical standards and encourage consumer respect for the technology.

Industry experts continue to stress the importance of ethical transparency. Sam Gregory, executive director at WITNESS, characterizes AI watermarking as a critical tool for reducing harm in communication. Regulators have taken a similar view: frameworks like the EU AI Act and the California AI Transparency Act require disclosure so that users know when content is AI-generated.

Promoting ethical transparency enables stakeholders to navigate complex social implications while advancing the responsible use of technology. A focus on trustworthy AI practices ultimately benefits all participants in the content creation ecosystem.

Quote by Freeman Dyson emphasizing the ethical dimensions of AI (Source: AZ Quotes)

What Next? Diving into the Regulatory Landscape of AI Transparency

Current regulatory frameworks for AI-generated content are evolving in response to the growing need for transparency and authenticity. The EU AI Act, approved by the European Parliament in March 2024, requires providers of AI systems to clearly label outputs as AI-generated. It also requires those outputs to be marked in a machine-readable, detectable way, with watermarking among the techniques that can serve this purpose, marking a significant shift towards standardization in the industry.

In parallel, the California AI Transparency Act (SB 942) imposes new obligations on major AI service providers. Effective from January 2026, this law requires companies to provide AI detection tools for users and to disclose when content is AI-generated. These regulations carry important implications for compliance and accountability within the technology sector.

However, challenges remain as organizations navigate the requirements for compliance while striving for creativity and innovation. Research indicates that the effectiveness of watermarking techniques varies across media formats, and as the regulatory landscape continues to develop, ongoing collaboration between regulators and industry stakeholders will be essential.

Understanding and adapting to these regulations is vital for AI developers, as they shape the future of ethical practices and accountability in content creation.

Timeline showing important milestones in the EU AI Act regarding AI transparency and regulations (Source: EU AIA)

AI's Future: Building Trust through Transparency and Innovation

The future landscape of AI-generated content will see continuous advancements in watermarking and transparency technologies. Predictions suggest that integrating robust watermarking with blockchain solutions will enable organizations to build user trust effectively and authenticate content. The ongoing evolution of these technologies will significantly influence the future of AI content creation.

As ethical considerations gain prominence in the technology sector, collaborative efforts among developers, regulators, and advocates will drive practices that prioritize transparency and accountability. Implementing effective watermarking and blockchain solutions will create a reliable framework, fostering a trusted environment for both creators and consumers.

Investing in responsible AI practices will ensure that technology aligns with societal values and expectations. The path forward indicates a commitment to building trust and credibility within the AI ecosystem, ultimately empowering users and creators alike.

A roadmap illustrating anticipated advancements and innovations in generative AI (Source: Infotech)

This exploration of AI transparency and ethics through content watermarks highlights the critical role of trust, authenticity, and regulatory frameworks in shaping the future of AI-generated content. Stakeholders must remain dedicated to advancing practices that enhance consumer confidence while navigating the complexities of this rapidly evolving technological landscape.
